CSCE 641 Research Project (Spring 2023)

"MVDES: Multi-View Depth Estimation Sandbox"

Reconstructing 3D information from 2D images is one of the most important problems in computer vision. Several variants of the problem exist; an important one is multi-view depth estimation, in which algorithms attempt to estimate the depth of objects in an image based on parallax from other measurement images of the same scene. Though this has been a central focus of research for decades, it is still an open problem; existing methods have several problems, for example: computational efficiency, handling of object edges and occlusions, and robustness to noise. MVDES (Multi-View Depth Estimation Sandbox) is a collection of tools for developing and evaluating depth estimation algorithms by producing synthetic datasets. It consists of three components: an interactive sandbox that can generate and render scenes, as well as display the real-time output of a selected depth estimation algorithm and produce test case "snapshots"; an application that allows the user to view their saved snapshots; and a benchmarking program that evaluates several depth estimation algorithms on the snapshots.

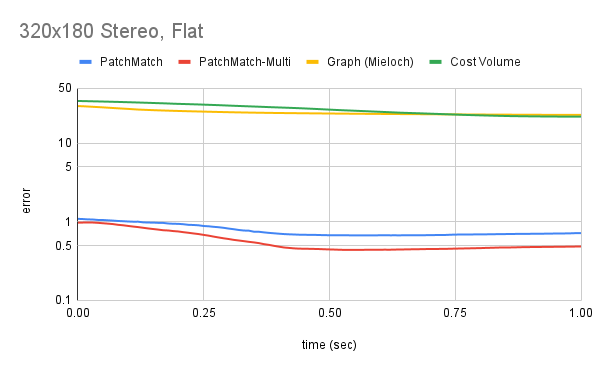

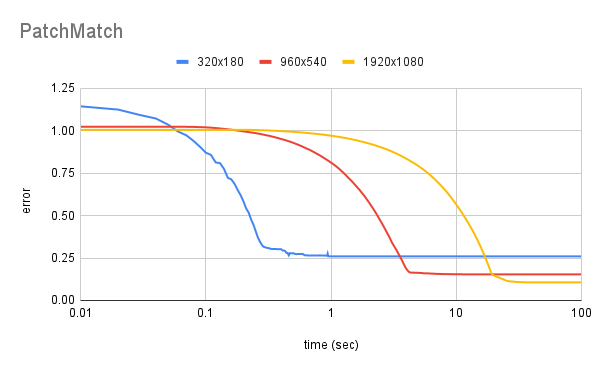

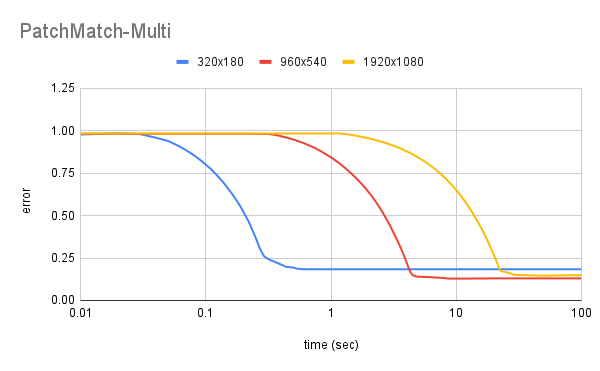

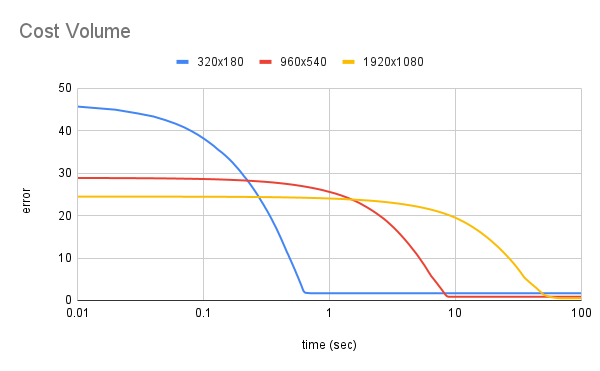

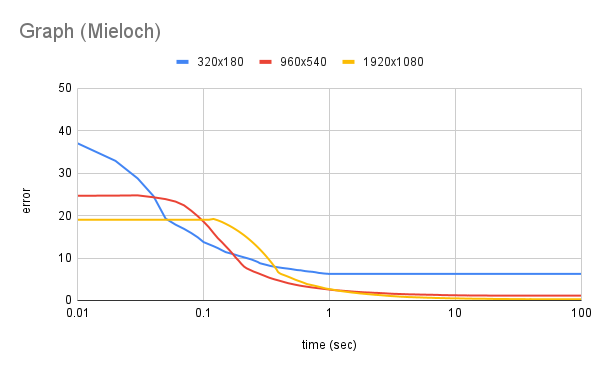

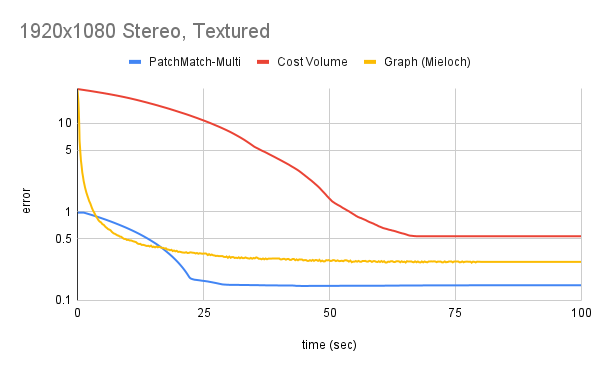

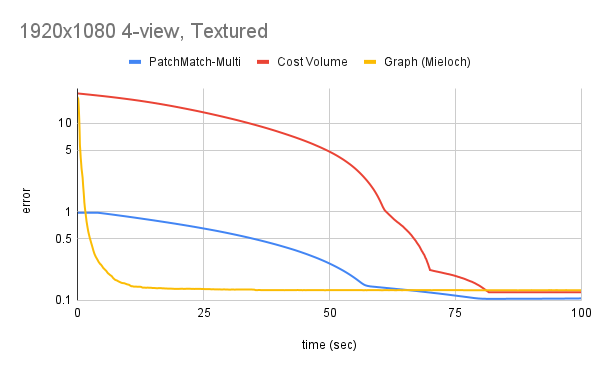

The current implementation includes several depth estimation algorithms: a basic cost volume approach, stereo PatchMatch disparity-based depth estimation, n-view PatchMatch, a randomized version of the algorithm from Graph-based multiview depth estimation using segmentation (Mieloch et al. 2017), and several other experimental algorithms based off of these. Preliminary comparisons were done using the first four algorithms on sets of test cases collected using the interactive sandbox. Several parameters were varied: image resolution (320x180, 960x540, 1920x1080), surface texturing (enabled/disabled), and number of secondary views (1 [stereo] or 4). Overall, increasing the input resolution and number of secondary views and enabling surface texturing led to better results (lower estimation error). A notable takeaway from the experiments is that PatchMatch is an extremely effective algorithm; in every case, it had the lowest final error, and it was usually the fastest. Also, though it isn't necessarily apparent from the description, Mieloch et al.'s algorithm is essentially a fast approximation of the cost volume approach; hence, they often had similar asymptotic performance, though the approximation is significantly faster.

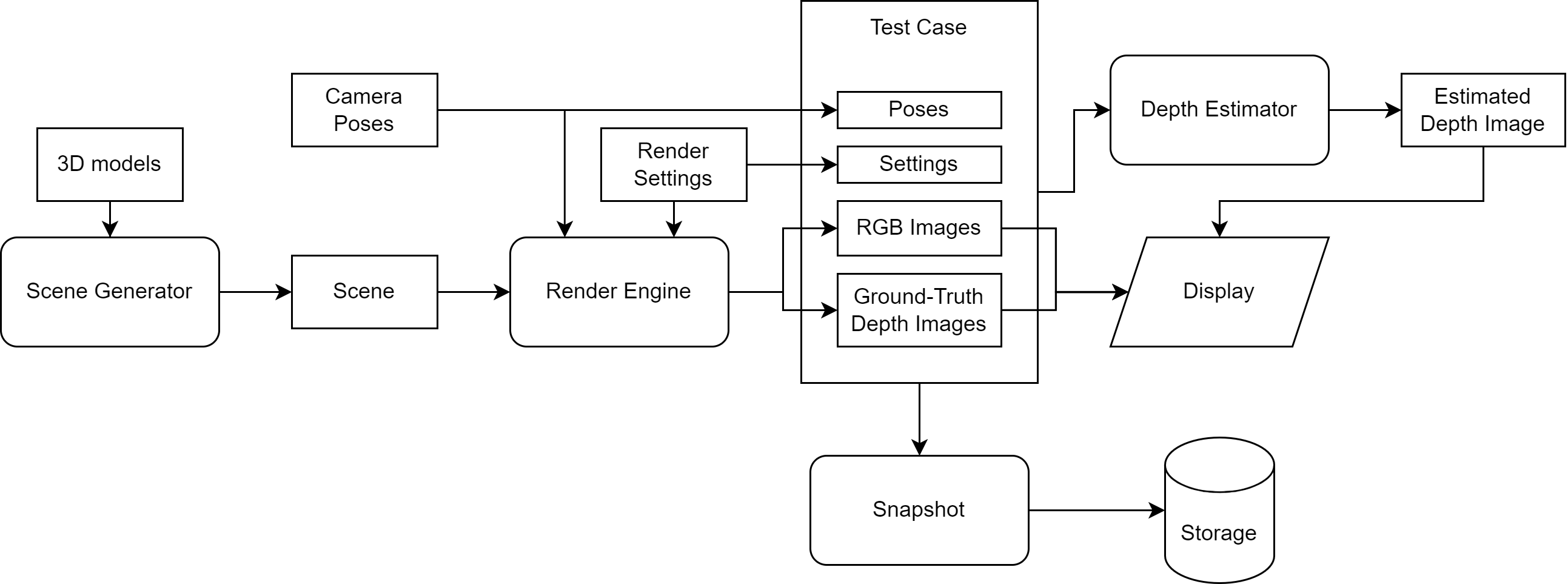

MVDES has three modes of operation. The interactive sandbox generates random scenes using models loaded from a user directory and allows the user to move the camera and adjust render settings. For development, the user can watch the depth estimation algorithms work in real-time based on the current view; for benchmarking, the current view can be saved as a "snapshot" for later evaluation. The snapshot viewer allows the user to load and view their saved snapshots. The evaluator is a batch-mode tool that evaluates a set of estimation algorithms against a set of saved snapshots and reports average error scores over time. The data flow of the interactive sandbox is shown below:

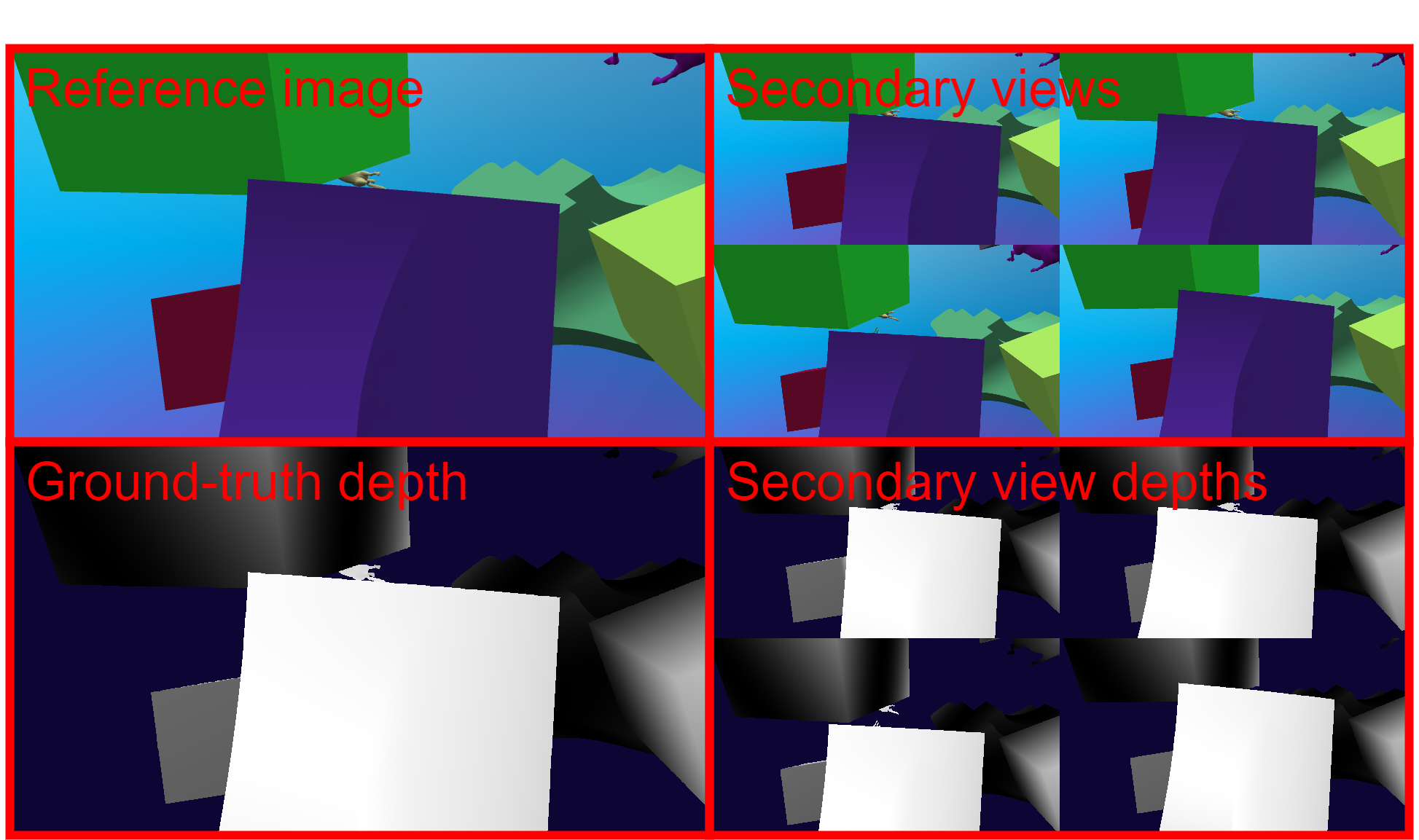

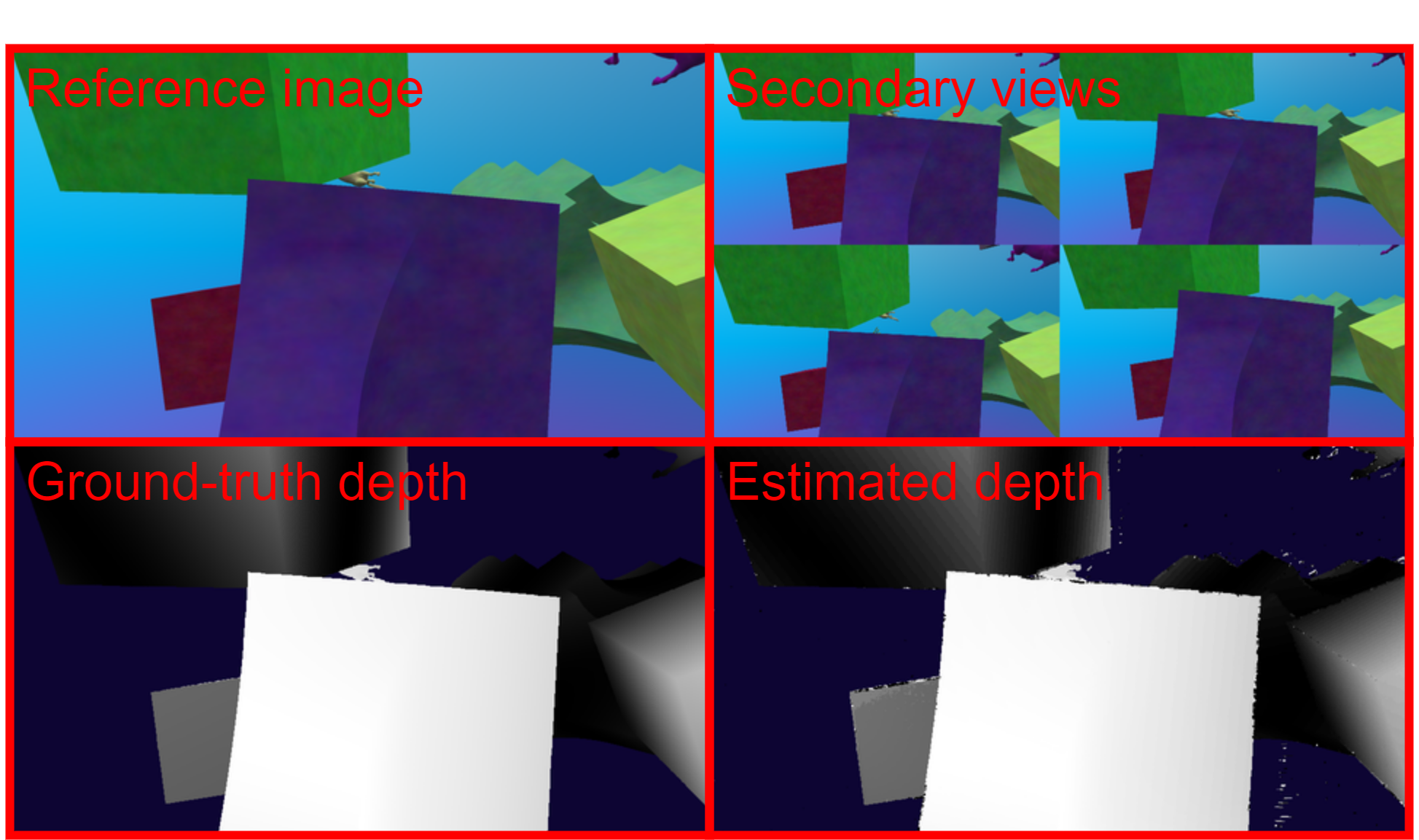

The viewer and evaluator both implement approximately a subset of the above behavior. The below images are screenshots taken from the sandbox program; the first shows the ground-truth view, while the second shows estimated depth using PatchMatch-Multi.

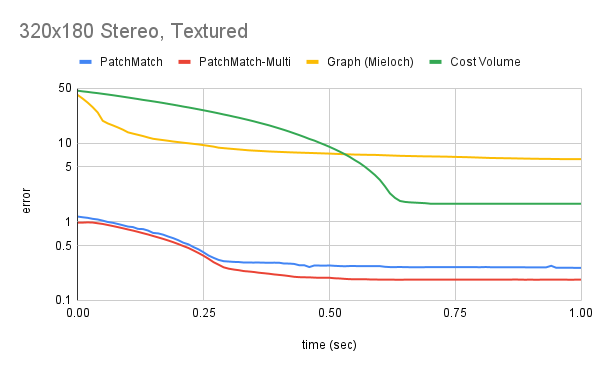

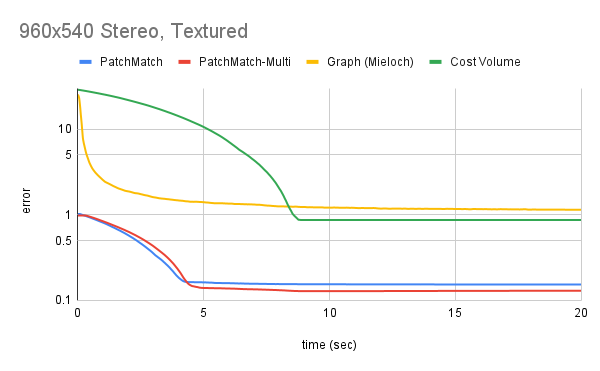

Performance was measured using average relative error (percentage error of each estimated depth value, normalized over the image size). Four algorithms were tested: 7x7 stereo PatchMatch, 7x7 n-view PatchMatch, 256-value logarithmic Cost Volume, and 2500-segment randomized Mieloch's algorithm. Several parameters were varied to observe their effect on the estimation runtime and accuracy. First, enabling surface textures improves performance, since it makes the correspondence problem much easier:

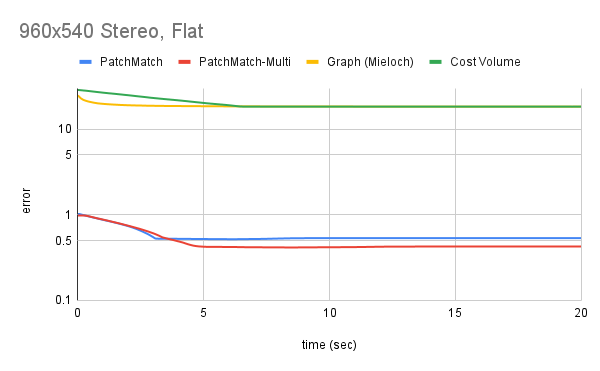

Increasing resolution improves performance, though most algorithms have runtime linear in the number of pixels:

Finally, for algorithms that can process more than one measurement image, increasing the number of secondary views increases accuracy at a small runtime cost:

There are a couple quick takeaways from these figures: first, PatchMatch is a highly effective algorithm, yielding the lowest error and often the fastest convergence; second, Mieloch et al.'s algorithm can be interpreted as an segment-based approximation of the cost volume approach. Though its runtime is technically linear in the number of pixels (due to the pixel clustering step), the constants are very low, so its speed remains high even on large images.

Even with these highly-effective algorithms, the final error rates were usually greater than 10%. Qualitatively, the error seems to come from three sources. Object boundaries (and occlusions) were the primary cause of this error, which none of the tested algorithms could properly resolve. Fortunately, the error resulting from this usually decreases proportionally as image resolution increases. Another issue was the fact that PatchMatch, as described in the original paper, cannot handle sub-pixel disparity; thus, it necessarily has some error, which generally shows up as wavy patterns throughout the image (as disparity goes from e.g. 1 to 2 pixels, the error is greatest where the value should be 1.5). Finally, textureless regions always cause issues, since these algorithms only use local information to assign depth/disparity values. When a surface has uniform color, a wide range of depth/disparity values will result in the same cost and the algorithm cannot determine the correct value.